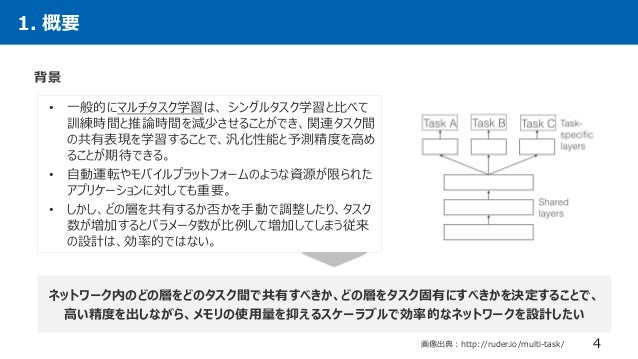

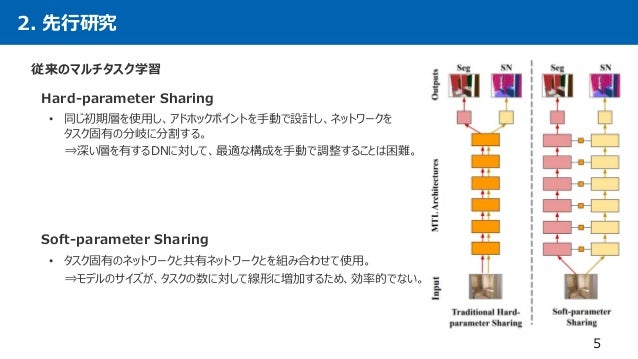

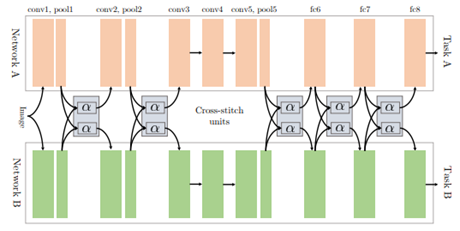

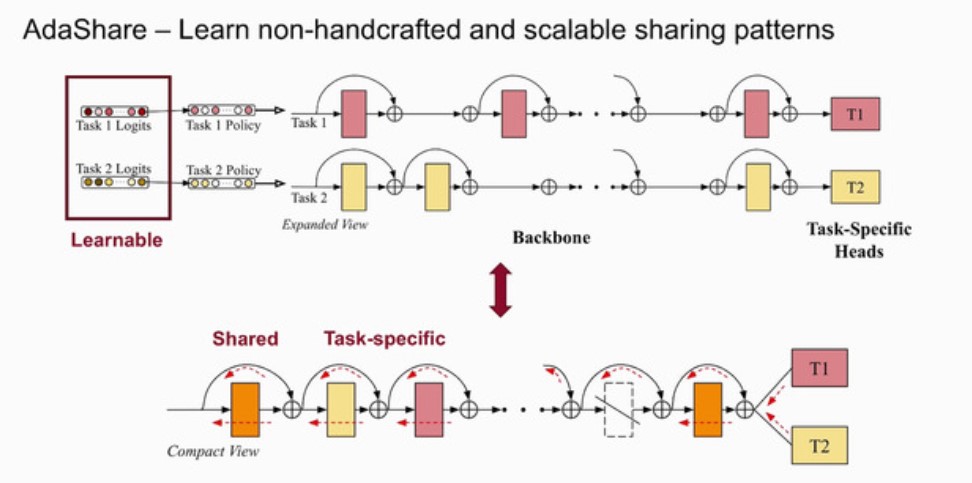

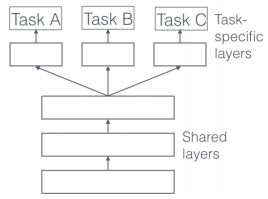

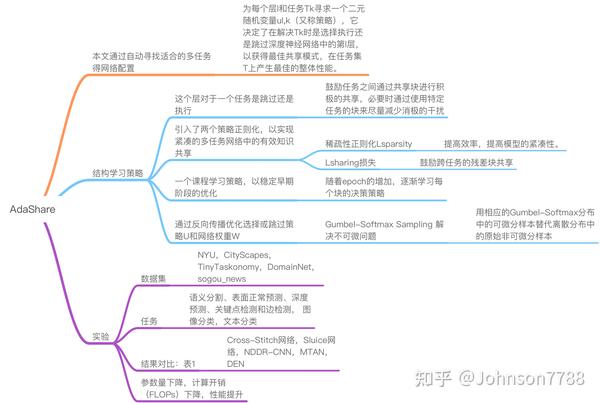

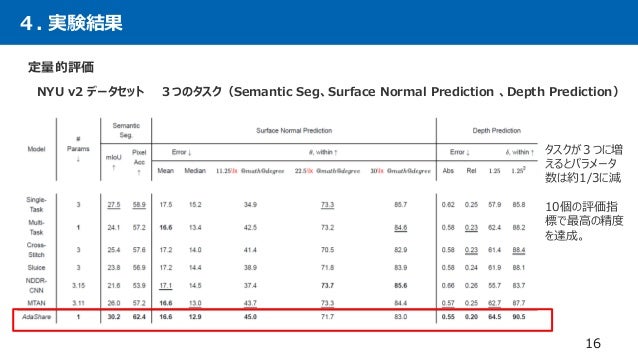

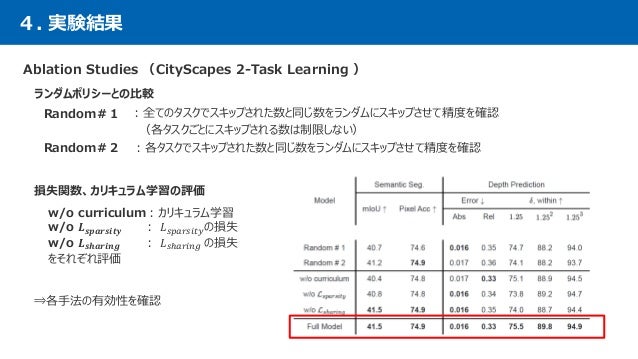

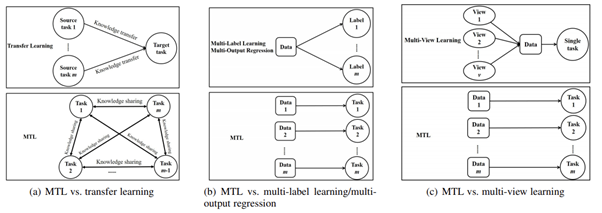

AdaShare: Learning what to share for efficient deep multi-task learning. In Advances in Neural Information Processing Systems. Shikun Liu, Edward Johns, and Andrew J. Davison. End-to-end multi-task learning with attention. In IEEE Conference on Computer Vision and Pattern Recognition, 2019.

AdaShare: Learning What to Share for Efficient Deep Multi-Task Learning (X. Sun et al.). End-to-End Multi-Task Learning with Attention (S. Liu et al., 2019). Which Tasks Should Be Learned Together in Multi-task Learning?

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Introduction.

Hard-parameter sharing. Advantages: scalable. Disadvantages: pre-assumed tree structures, negative transfer, sensitivity to task weights.

Soft-parameter sharing. Advantages: less negative interference (though it still exists), better performance. Disadvantages: not scalable.
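The scalability contrast between hard and soft parameter sharing can be made concrete with a rough parameter count. The sketch below assumes a simplified model: hard sharing uses one shared backbone plus one head per task, while soft sharing uses one backbone and head per task plus a fusion module. The function names and all the numbers are hypothetical and chosen only for illustration.

```python
def hard_sharing_params(backbone_params: int, head_params: int, num_tasks: int) -> int:
    """Hard sharing (assumed form): one shared backbone, one small head per task."""
    return backbone_params + num_tasks * head_params


def soft_sharing_params(backbone_params: int, head_params: int,
                        fusion_params: int, num_tasks: int) -> int:
    """Soft sharing (assumed form): a full network per task plus a fusion module."""
    return num_tasks * (backbone_params + head_params) + fusion_params


if __name__ == "__main__":
    # Hypothetical sizes: 1000 backbone parameters, 10 per head, 50 for fusion.
    for tasks in (2, 3, 5):
        hard = hard_sharing_params(1000, 10, tasks)
        soft = soft_sharing_params(1000, 10, 50, tasks)
        print(f"tasks={tasks}: hard={hard}, soft={soft}")
```

Under these assumptions the hard-sharing count grows only by the head size for each extra task, whereas the soft-sharing count grows by a whole network, which is one way to read "scalable" versus "not scalable".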

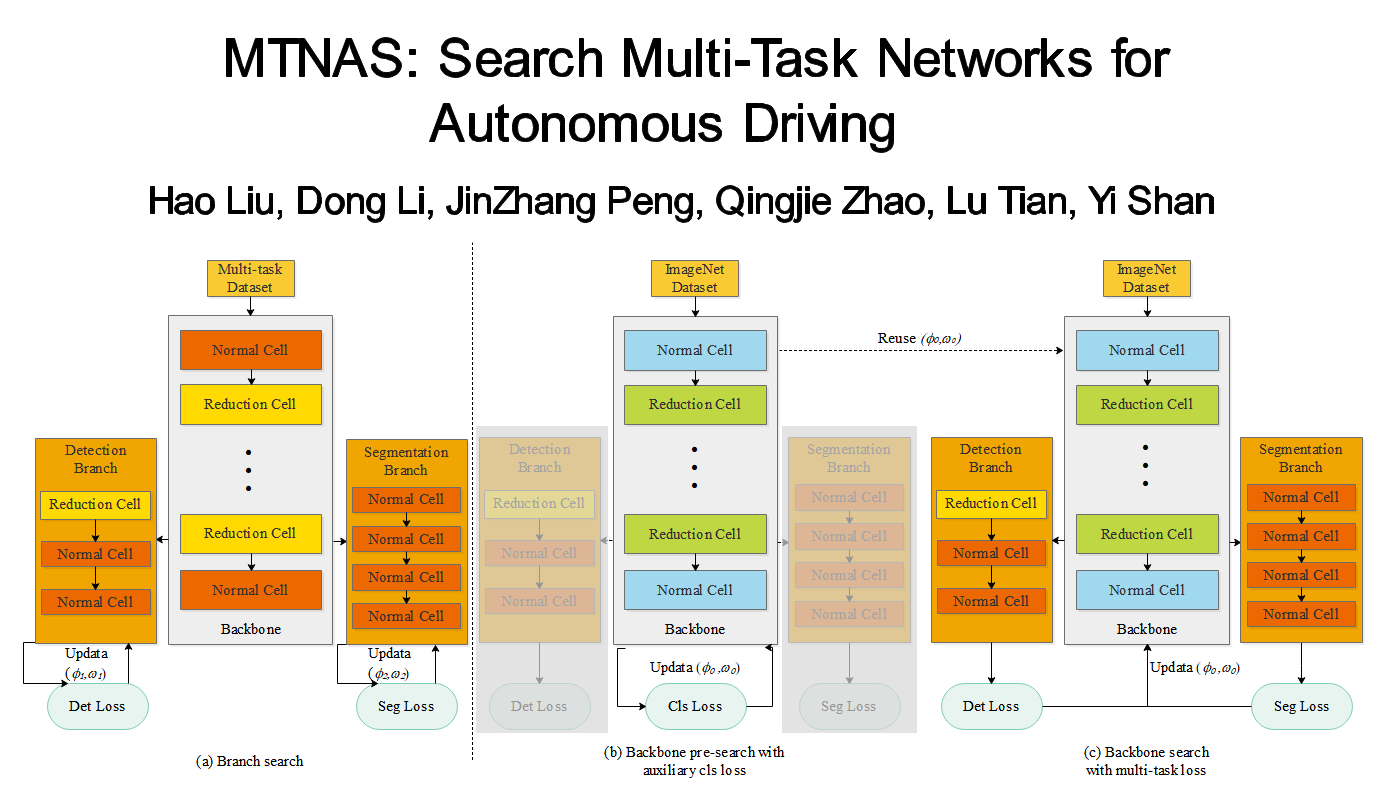

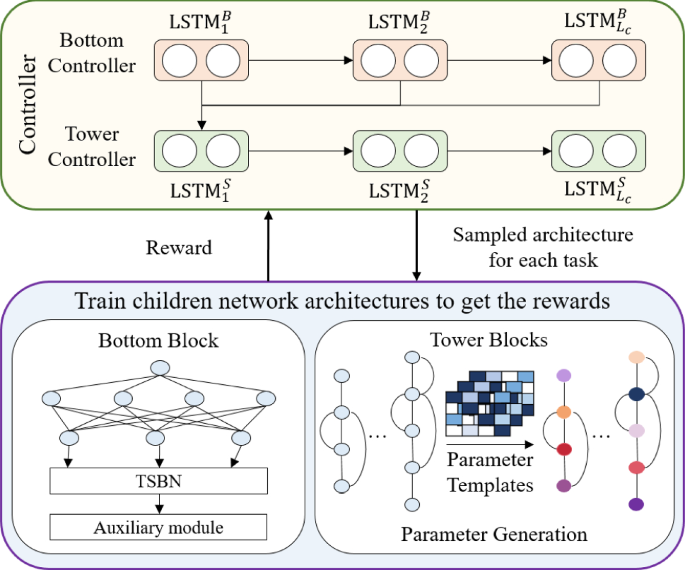

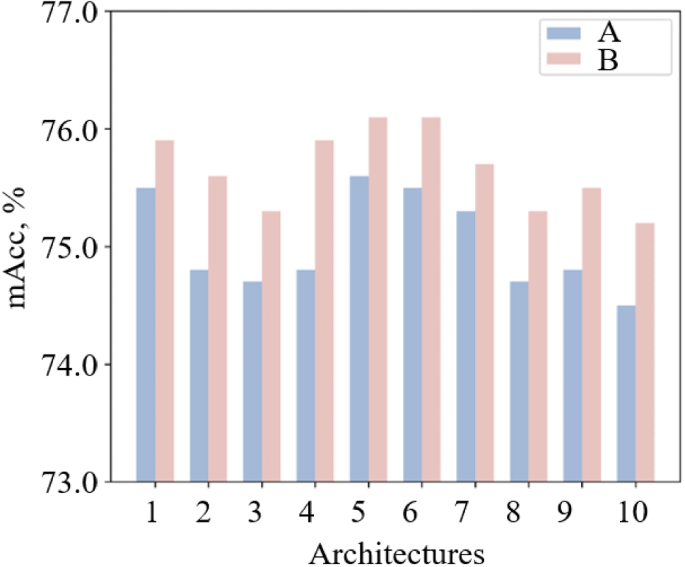

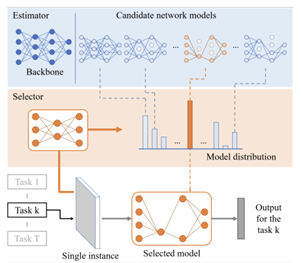

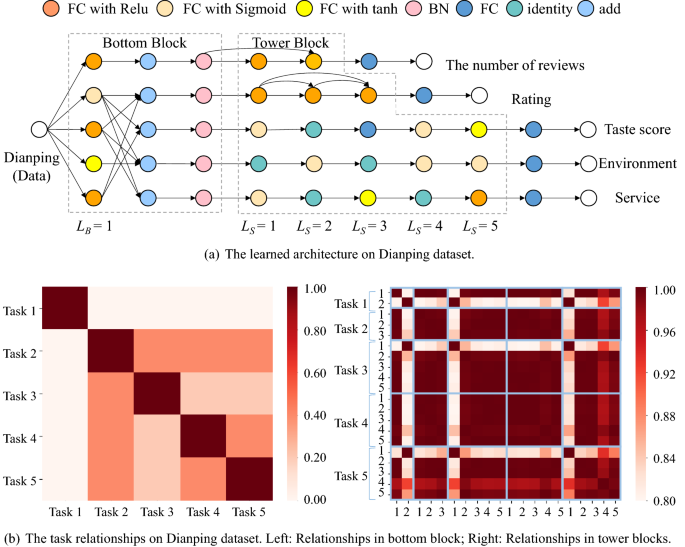

Deep Multi Task Learning With Flexible And Compact Architecture Search Springerlink

Adashare learning what to share for efficient deep multi-task learning

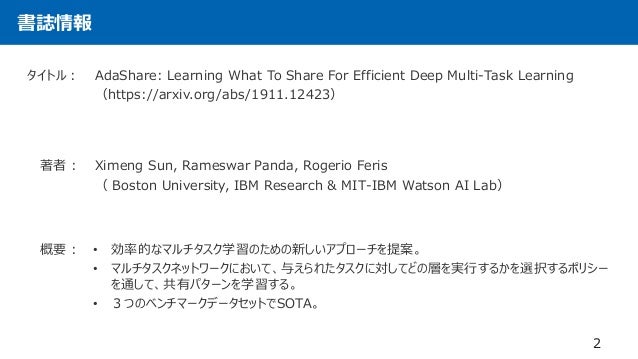

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning (arXiv). Intro: Boston University & IBM Research & MIT-IBM Watson AI Lab.

Hierarchical Granularity Transfer Learning (NeurIPS). 35. CO-Optimal Transport (NeurIPS; OT). 34. A Combinatorial Perspective on Transfer Learning (NeurIPS). 33. AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning (NeurIPS; multi-task learning).

DL Reading Group (DL輪読会) Adashare Learning What To Share For Efficient Deep Multi Task

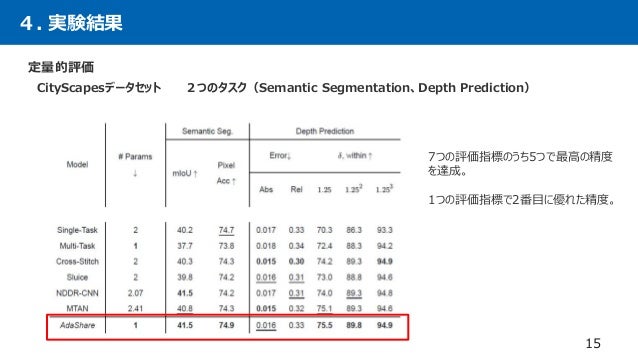

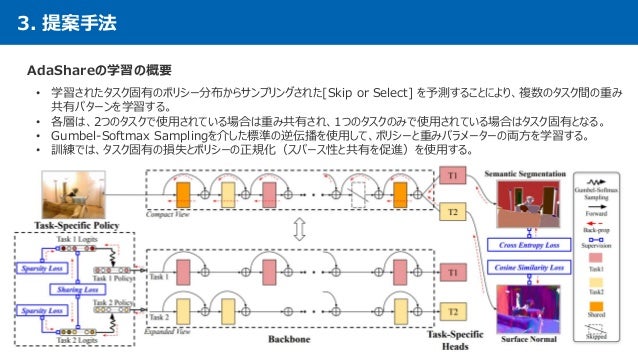

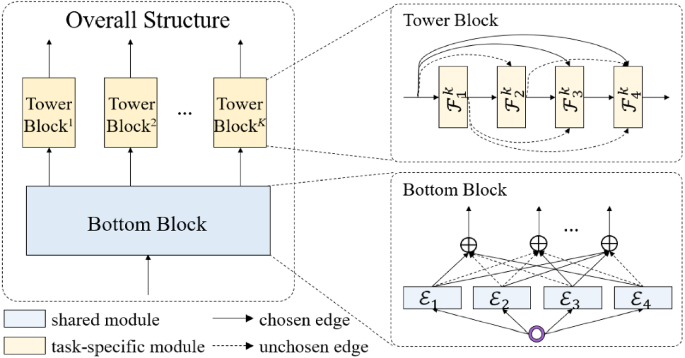

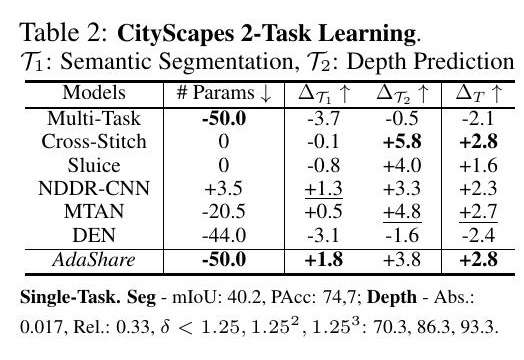

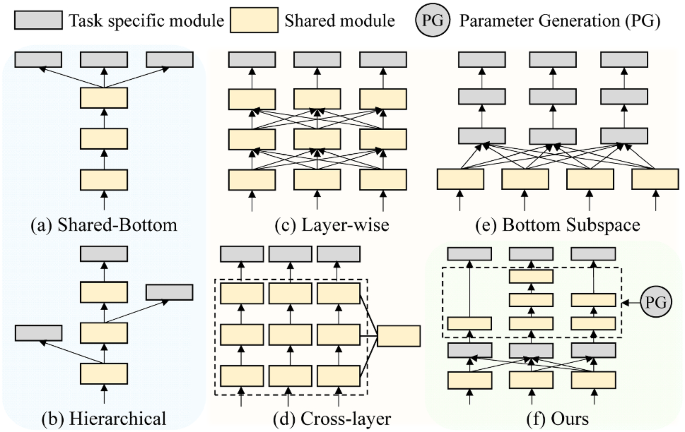

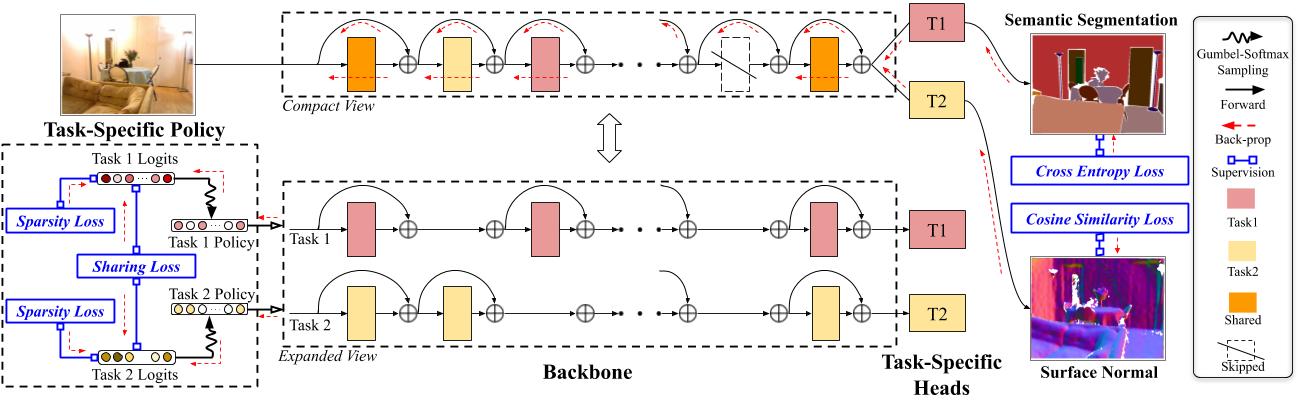

AdaShare: Learning what to share for efficient deep multi-task learning. arXiv preprint (2019). Google Scholar.

Multi-task learning is an open and challenging problem in computer vision. The typical way of conducting multi-task learning with deep neural networks is either through handcrafted schemes that share all initial layers and branch out at an ad hoc point, or through separate task-specific networks with an additional feature sharing/fusion mechanism. To this end, we propose AdaShare, a novel and differentiable approach for efficient multi-task learning that learns the feature sharing pattern to achieve the best recognition accuracy, while restricting the memory footprint as much as possible.

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. AdaShare is a novel and differentiable approach for efficient multi-task learning that learns the feature sharing pattern to achieve the best recognition accuracy. 10 August 2021.

9 Dec: AIR Seminar, "AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning". 9 Dec: Poster Session, "Computational Tools for Data Science".

Ximeng Sun, Rameswar Panda, Rogerio Feris, and Kate Saenko. AdaShare: Learning what to share for efficient deep multi-task learning. arXiv preprint, 2019. Google Scholar.

Multi-view surveillance video summarization via joint embedding and sparse optimization. AdaShare: Learning what to share for efficient deep multi-task learning. X. Sun, R. Panda, R. Feris, K. Saenko, 2019. AR-Net: Adaptive frame resolution for efficient action recognition. Y. Meng, C.-C. Lin, R. Panda, P. Sattigeri, L. Karlinsky, A. Oliva, K. Saenko.

In this paper, we present two simple ways to learn fair metrics from a variety of data types. We show empirically that fair training with the learned metrics leads to improved fairness on three machine learning tasks susceptible to gender and racial biases. We also provide theoretical guarantees on the statistical performance of both approaches.

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. X. Sun, R. Panda, R. Feris, and K. Saenko. NeurIPS. See also: Fully-adaptive Feature Sharing in Multi-Task Networks (CVPR 2017). Project Page.

Large Scale Neural Architecture Search with Polyharmonic Splines. AAAI Workshop on Meta-Learning for Computer Vision, 2021. 12. X. Sun, R. Panda, R. Feris, and K. Saenko. AdaShare: Learning What to Share for Efficient Deep Multi-Task Learning. Conference on Neural Information Processing Systems (NeurIPS).

Multimodal Learning Archives Mit Ibm Watson Ai Lab

Multi-task learning is an open and challenging problem in computer vision. The typical way of conducting multi-task learning with deep neural networks is either through handcrafting schemes that share all initial layers and branch out at an ad hoc point or through using separate task-specific networks with an additional feature sharing/fusion mechanism.

Multi-task learning (Caruana, 1997) has experienced rapid growth in recent years. Because of the breakthroughs in the performance of individually trained single-task neural networks, researchers have shifted their attention towards training networks that are able to solve multiple tasks at the same time. One clear benefit of such a system is reduced latency.

Multi-task learning is an open and challenging problem in computer vision. Unlike existing methods, we propose an adaptive sharing approach, called AdaShare, that decides what to share across which tasks.

Hongyan Tang, Junning Liu, Ming Zhao, and Xudong Gong. Progressive Layered Extraction (PLE): A Novel Multi-Task Learning (MTL) Model for Personalized Recommendations.

Kdst Adashare Learning What To Share For Efficient Deep Multi Task Learning Nips Paper Review

Many task learning with task routing. In Proc. 2019 IEEE Int. Conf. on Computer Vision (ICCV'19), 2019. Google Scholar; Cross Ref.

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Ximeng Sun, Rameswar Panda, Rogerio Feris, Kate Saenko.

Learning Multiple Tasks with Deep Relationship Networks (arXiv). Parameter-Efficient Multi-task and Transfer Learning. Intro: The University of Chicago & Google.

Pdf Dselect K Differentiable Selection In The Mixture Of Experts With Applications To Multi Task Learning

Original paper: AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Authors: Ximeng Sun, Rameswar Panda. Published: November. Code: GitHub sunxm2357/AdaShare.

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Ximeng Sun, Rameswar Panda, Rogerio Feris, Kate Saenko. Neural Information Processing Systems (NeurIPS). Project Page. Supplementary Material.

Branched Multi Task Networks Deciding What Layers To Share Deepai

Kate Saenko Proud Of My Wonderful Students 5 Neurips Papers Come Check Them Out Today Tomorrow At T Co W5dzodqbtx Details Below Buair2 Bostonuresearch Twitter

In this paper, we propose a novel multi-task deep learning (MTDL) algorithm for cancer classification. The structure of the network is shown in Fig. 3. The proposed MTDL shares information across different tasks by setting shared hidden units. In Fig. 3, the red shapes signify shared hidden units of all the task sources in each layer, and the triangle, square and pentagon ...

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Ximeng Sun, Rameswar Panda, Rogerio Feris, Kate Saenko (link). 49. Residual Distillation: Towards Portable Deep Neural Networks without Shortcuts. Guilin Li, Junlei Zhang, Yunhe Wang, Chuanjian Liu, Matthias Tan, Yunfeng Lin, Wei Zhang, Jiashi Feng, Tong Zhang (link).

1 Boston University, 2 MIT-IBM Watson AI Lab, IBM Research. {sunxm, saenko}@bu.edu, {rpanda@, rsferis@us.}ibm.com

Adashare Learning What To Share For Efficient Deep Multi Task Learning Issue 1517 Arxivtimes Arxivtimes Github

Ximeng Sun Catalyzex

AdaShare: AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning (Python; 46; 7). TAI_video_frame_inpainting: Inpainting video frames via a Temporally-Aware Interpolation network (Python; 23; 3). mcnet_pytorch (Python; 11).

Clustered multi-task learning: A convex formulation. In NIPS, 2009. [23] Zhuoliang Kang, Kristen Grauman, and Fei Sha. Learning with whom to share in multi-task feature learning. In ICML, 2011. [31] Shikun Liu, Edward Johns, and Andrew J. Davison. End-to-end multi-task learning with attention. In CVPR, 2019.

Adashare Learning What To Share For Efficient Deep Multi Task Learning

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning. Review 1. Summary and Contributions: This work proposes to search for a feature sharing strategy for multi-task learning. It relies on standard backpropagation to jointly learn the feature sharing policy and the network weights. The paper introduces a method for multi-task learning.

Overview: AdaShare is a novel and differentiable approach for efficient multi-task learning that learns the feature sharing pattern to achieve the best recognition accuracy, while restricting the memory footprint as much as possible.
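To give a feel for what a differentiable, per-task sharing policy over shared blocks might look like, here is a minimal pure-Python sketch. It assumes a binary select-or-skip decision per block and task, relaxed with a Gumbel-Softmax style gate during training and made discrete afterwards. The text above does not spell out these details, so the gate form, the residual update, and all names (`relaxed_select_gate`, `hard_gate`, `forward`) are illustrative assumptions rather than the paper's implementation.

```python
import math
import random


def relaxed_select_gate(select_logit, skip_logit, temperature, rng):
    """Soft weight for "select", from a Gumbel-Softmax style relaxation.

    The result is smooth in the logits, which is what would let a policy
    be trained with ordinary backpropagation (assumed setup).
    """
    def gumbel():
        u = max(rng.random(), 1e-12)  # avoid log(0)
        return -math.log(-math.log(u))

    a = (select_logit + gumbel()) / temperature
    b = (skip_logit + gumbel()) / temperature
    m = max(a, b)  # subtract the max for numerical stability
    ea, eb = math.exp(a - m), math.exp(b - m)
    return ea / (ea + eb)


def hard_gate(select_logit, skip_logit):
    """Discrete decision once the policy is fixed: 1.0 = select, 0.0 = skip."""
    return 1.0 if select_logit >= skip_logit else 0.0


def forward(x, blocks, gates):
    """Run one task through the shared blocks; a gate of 0 skips the block."""
    h = x
    for block, g in zip(blocks, gates):
        h = h + g * block(h)  # residual form: skipping leaves h unchanged
    return h


if __name__ == "__main__":
    # Hypothetical residual branches and policy logits for two tasks.
    blocks = [lambda h: 2.0 * h, lambda h: h + 1.0, lambda h: -0.5 * h]
    logits_a = [(2.0, -1.0), (0.3, 0.9), (1.5, 0.0)]
    logits_b = [(1.0, 0.0), (2.0, -2.0), (-1.0, 1.0)]
    gates_a = [hard_gate(s, k) for s, k in logits_a]
    gates_b = [hard_gate(s, k) for s, k in logits_b]
    print("task A:", gates_a, forward(1.0, blocks, gates_a))
    print("task B:", gates_b, forward(1.0, blocks, gates_b))
    rng = random.Random(0)
    print("soft gate sample:", relaxed_select_gate(0.0, 0.0, 1.0, rng))
```

In this toy setup, blocks that both tasks select are shared, blocks that only one task selects become task-specific, and blocks that no task selects could be dropped, which is one way a learned policy could limit the memory footprint.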

Pdf Adashare Learning What To Share For Efficient Deep Multi Task Learning Semantic Scholar

Pdf Saliency Regularized Deep Multi Task Learning

Casting the problem as a weakly supervised learning problem, this work proposes a flexible deep 3D CNN architecture to learn the notion of importance using only video-level annotation, and without any human-crafted training data.

We propose a principled approach to multi-task deep learning which weighs multiple loss functions by considering the homoscedastic uncertainty of each task.
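As a small illustration of weighing task losses by homoscedastic uncertainty, the sketch below uses the regression-style form often associated with this idea: each task loss L_i is scaled by 0.5 * exp(-s_i) and a penalty 0.5 * s_i is added, where s_i = log(sigma_i^2) would be a learned parameter. The snippet above does not give the formula, so this form and the function name `uncertainty_weighted_loss` are assumptions for illustration.

```python
import math


def uncertainty_weighted_loss(task_losses, log_variances):
    """Sum of 0.5 * exp(-s_i) * L_i + 0.5 * s_i over tasks (assumed form).

    s_i = log(sigma_i^2); a larger s_i down-weights task i, but the
    0.5 * s_i term penalises letting the uncertainty grow without bound.
    """
    if len(task_losses) != len(log_variances):
        raise ValueError("need one log-variance per task loss")
    return sum(0.5 * math.exp(-s) * loss + 0.5 * s
               for loss, s in zip(task_losses, log_variances))


if __name__ == "__main__":
    losses = [2.0, 4.0]  # hypothetical per-task losses
    print(uncertainty_weighted_loss(losses, [0.0, 0.0]))
    # For a fixed loss L, the term is minimised at s = log(L).
    print(uncertainty_weighted_loss(losses, [math.log(2.0), math.log(4.0)]))
```

With all s_i set to zero this reduces to half the plain sum of the losses; letting each s_i move toward log(L_i) lowers the combined objective, so tasks with larger losses end up with smaller weights.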

Multi Task Learning With Deep Neural Networks A Survey Arxiv Vanity

Auto Virtualnet Cost Adaptive Dynamic Architecture Search For Multi Task Learning Sciencedirect

AdaShare: Learning What To Share For Efficient Deep Multi-Task Learning (Supplementary Material). X. Sun, R. Panda, R. Feris, K. Saenko.

I am a Research Staff Member at MIT-IBM Watson AI Lab, Cambridge, where I work on solving real-world problems using computer vision and machine learning. In particular, my current focus is on learning with limited supervision (transfer learning, few-shot learning) and dynamic computation for several computer vision problems.

D How To Do Multi Task Learning Intelligently R Machinelearning

Kate Saenko On Slideslive

Large Scale Neural Architecture Search with Polyharmonic Splines. AAAI Workshop on Meta-Learning for Computer Vision, 2021.

X. Sun, R. Panda, R. Feris, and K. Saenko. AdaShare: Learning What to Share for Efficient Deep Multi-Task Learning. Conference on Neural Information Processing Systems (NeurIPS).

Abstract: Temporal modelling is the key for efficient video action recognition. While understanding temporal information can improve recognition accuracy for dynamic actions, removing temporal redundancy and reusing past features can significantly save computation, leading to efficient ...

Adashare Learning What To Share For Efficient Deep Multi Task Learning Pythonrepo

Adashare Learning What To Share For Efficient Deep Multi Task Learning Deepai

Adashare Efficient Deep Multi-Task Learning Zhihu

How To Do Multi Task Learning Intelligently

Learned Weight Sharing For Deep Multi Task Learning By Natural Evolution Strategy And Stochastic Gradient Descent Deepai

Home Rogerio Feris

Adashare Learning What To Share For Efficient Deep Multi Task Learning Arxiv Vanity

Rethinking Hard Parameter Sharing In Multi Task Learning Deepai

Papertalk The Platform For Scientific Paper Presentations

Dselect K Differentiable Selection In The Mixture Of Experts With Applications To Multi Task Learning Arxiv Vanity

Paper Review Adashare Learning What To Share For Efficient Deep Multi Task Learning Nips

Adashare Learning What To Share For Efficient Deep Multi Task Learning Papers With Code

Adashare Re Train Py At Master Sunxm2357 Adashare Github

Neurips Paper Recommendation | Adashare Learning What To Share For Efficient Deep Multi Task Learning BAAI Community (智源社区)

Adashare Learning What To Share For Efficient Deep Multi Task Learning Request Pdf

Learning To Branch For Multi Task Learning Deepai

Multi Task Learning Study Notes: A Record Of The Literature Read While Learning MTL By Yanwei Liu Medium
